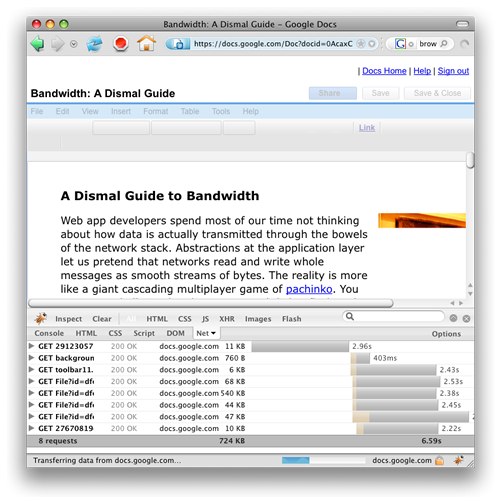

Web app developers spend most of our time not thinking about how data is actually transmitted through the bowels of the network stack. Abstractions at the application layer let us pretend that networks read and write whole messages as smooth streams of bytes. Generally this is a good thing. But knowing what's going on underneath is crucial to performance tuning and application design. The character of our users' internet connections is changing and some of the rules of thumb we rely on may need to be revised.

In reality, the Internet is more like a giant cascading multiplayer game of pachinko. You pour some balls in, they bounce around, lights flash and —usually— they come out in the right order on the other side of the world.

What we talk about, when we talk about bandwidth

It's common to talk about network connections solely in terms of "bandwidth". Users are segmented into the high-bandwidth who get the best experience, and low-bandwidth users in the backwoods. We hope some day everyone will be high-bandwidth and we won't have to worry about it anymore.

That mental shorthand served when users had reasonably consistent wired connections and their computers ran one application at a time. But it's like talking only about the top speed of a car or the MHz of a computer. Latency and asymmetry matter at least as much as the notional bits-per-second and I argue that they are becoming even more important. The quality of the "last mile" of network between users and the backbone is in some ways getting worse as people ditch their copper wires for shared wifi and mobile towers, and clog their uplinks with video chat.

It's a rough world out there, and we need to do a better job of thinking about and testing under realistic network conditions. A better mental model of bandwidth should include:

- packets-per-second

- packet latency

- upstream vs downstream

Packets, not bytes

The quantum of internet transmission is not the bit or the byte, it's the packet. Everything that happens on the 'net happens as discrete pachinko balls of regular sizes. A message of N bytes is chopped into ceil(N / 1460) packets [1] which are then sent willy-nilly. That means there is little to no difference between sending 1 byte or 1,000. It also means that sending 1,461 bytes is twice the work of sending 1,460: two packets have to be sent, received, reassembled, and acknowledged.

Packet #1 Payload

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

.....................................................................

...........................................................

Packet #2 Payload

.

Listing 0: Byte 1,461 aka The Byte of Doom
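To make the packet arithmetic concrete, here is a minimal sketch of the ceil(N / 1460) rule from above. The function name and the sample sizes are mine, chosen for illustration; the 1,460-byte payload is the figure used in this article and real paths can differ.

```python
import math

MSS_BYTES = 1460  # per-packet payload figure used in the article


def packets_needed(message_bytes: int, mss: int = MSS_BYTES) -> int:
    """Return how many packets a message of the given size is chopped into."""
    if message_bytes <= 0:
        return 0
    return math.ceil(message_bytes / mss)


# 1 byte and 1,000 bytes cost the same; byte 1,461 doubles the work.
for size in (1, 1000, 1460, 1461, 130 * 1024):
    print(f"{size:>7} bytes -> {packets_needed(size)} packet(s)")
```

The last sample size is a 130KB file, which comes out to 92 packets under this assumption.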

Crossing the packet line in HTTP is very easy to do without knowing it. Suppose your application uses a third-party web analytics library which, like most analytics libraries, stores a big hunk of data about the user inside a long-lived cookie tied to your domain. Suppose you stuff a little bit of data into the cookie too. This cookie data is thereafter echoed back to your web server upon each request. The boilerplate HTTP headers (Accept, User-agent, etc) sent by every modern browser take up a few hundred more bytes. Add in the actual URL, Referer header, query parameters... and you're dead. There is also the little-known fact that browsers split certain POST requests into at least two packets regardless of the size of the message.
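A rough way to see how close a request is to the packet line is to add up the request line and headers. The sketch below assumes a plain HTTP/1.1 request with no body. Every header value in it is made up for illustration, and `request_size` and `fits_in_one_packet` are hypothetical helper names, not part of any library.

```python
MSS_BYTES = 1460  # per-packet payload figure used in the article


def request_size(method: str, path: str, headers: dict) -> int:
    """Bytes in an HTTP/1.1 request line plus headers (no body)."""
    lines = [f"{method} {path} HTTP/1.1"]
    lines.extend(f"{name}: {value}" for name, value in headers.items())
    # Each line ends in CRLF, and a blank line terminates the header block.
    return len(("\r\n".join(lines) + "\r\n\r\n").encode("utf-8"))


def fits_in_one_packet(method: str, path: str, headers: dict,
                       mss: int = MSS_BYTES) -> bool:
    """True if the whole request fits in a single packet payload."""
    return request_size(method, path, headers) <= mss


# Hypothetical request: all values below are invented for this example.
example_headers = {
    "Host": "www.example.com",
    "User-Agent": "Mozilla/5.0 (illustrative user agent string)",
    "Accept": "text/html,application/xhtml+xml",
    "Referer": "http://www.example.com/blah?foo=bar",
    "Cookie": "analytics=" + "x" * 900 + "; session=" + "y" * 300,
}
size = request_size("GET", "/blah?foo=bar", example_headers)
print(size, fits_in_one_packet("GET", "/blah?foo=bar", example_headers))
```

Running something like this against the headers your own users actually send is a quick sanity check on how much cookie weight you can afford.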

One packet, more or less, who cares? For one, none of your fancy caching and CDNs can help the client send data upstream. TCP slow-start means that the client will wait for acknowledgement of the first packet before sending the second. And as we'll see below, that extra packet can make a large difference in the responsiveness of your app when it's compounded by latency and narrow upstream connections.

Packet Latency

Packet latency is the time it takes a packet to wind through the wires and hops between points A and B. It is roughly a function of the physical distance (at 2/3 of the speed of light) plus the time the packet spends queued up inside various network devices along the way. A typical packet sent on the 'net backbone between San Francisco and New York will take about 60 milliseconds. But the latency of a user's last-mile internet connection can vary enormously [2]. Maybe it's a hot day and their router is running slowly. The EDGE mobile network has a best-case latency of 150msec and a real-world average of 500msec. There is a semi-famous rant from 1996 complaining about 100msec latency from substandard telephone modems. If only.
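The distance part of that function is easy to estimate. This sketch computes one-way propagation delay at 2/3 of the speed of light. The 4,100 km figure for San Francisco to New York is my own round assumption for the straight-line distance, so treat the result as a lower bound: real routes are longer, and queueing adds to it.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = 2 / 3) -> float:
    """One-way propagation delay in milliseconds, ignoring all queueing."""
    return distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor) * 1000


# Assumed distance; roughly 20.5 ms one way before any device touches it.
print(round(propagation_delay_ms(4100), 1))
```

The gap between this floor and the roughly 60 milliseconds quoted above is the time spent on indirect paths and inside network devices.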

Packet loss

Packet loss manifests as packet latency. The odds are decent that a couple of the packets that made up the copy of this article you are reading got lost along the way. Maybe they had a collision, maybe they stopped to have a beer and forgot. The sending end then has to notice that a packet has not been acknowledged and re-transmit.

Wireless home networks are becoming the norm and they are unfortunately very susceptible to interference from devices sitting on the 2.4GHz band, like microwaves and baby monitors. They are also notorious for cross-vendor incompatibilities. Another dirty secret is that consumer-grade wifi devices you'll find in cafés and small offices don't do traffic shaping. All it takes is one user watching a video to flood the uplink.
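To get a feel for how quickly loss adds up, here is a back-of-the-envelope sketch. It assumes each packet is lost independently with the same probability, which real networks do not guarantee (losses tend to come in bursts), so take the numbers as illustrative only.

```python
def prob_any_loss(packets: int, loss_rate: float) -> float:
    """Chance that at least one of `packets` is lost, assuming independence."""
    return 1 - (1 - loss_rate) ** packets


def expected_transmissions(packets: int, loss_rate: float) -> float:
    """Average number of sends needed to deliver `packets`, with retries."""
    return packets / (1 - loss_rate)


# A 92-packet download over a link that drops 5% of packets.
print(prob_any_loss(92, 0.05))
print(expected_transmissions(92, 0.05))
```

Under these assumptions a transfer of that size almost never gets through without at least one retransmission, and each one costs a timeout plus a round trip.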

Upstream < Downstream

Internet providers lie. That "6 Megabit" cable internet connection is actually 6 Mbps down and 1 Mbps up. The bandwidth reserved for upstream transmission is often 20% or less of the total available. This was an almost defensible thing to do until users started file sharing, VOIPing, video chatting, etc. en masse. Even though users still pull more information down than they send up, the asymmetry of their connections means that the upstream is a chokepoint that will probably get worse for a long time.
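The asymmetry is easy to put in numbers. This sketch computes the raw serialization time for the same payload in each direction, using the 6 Mbps down and 1 Mbps up figures above. It ignores latency, protocol overhead and competing traffic, and the 100,000-byte payload is an arbitrary example size.

```python
def transfer_seconds(size_bytes: int, link_bits_per_second: float) -> float:
    """Best-case time to push size_bytes through a link of the given rate."""
    return size_bytes * 8 / link_bits_per_second


PAYLOAD_BYTES = 100_000  # arbitrary example size
down = transfer_seconds(PAYLOAD_BYTES, 6_000_000)
up = transfer_seconds(PAYLOAD_BYTES, 1_000_000)
print(f"down: {down:.3f}s  up: {up:.3f}s  ratio: {up / down:.1f}x")
```

And that is with the uplink all to yourself, which is the optimistic case.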

A Dismal Testing Harness

Figure 0: It's popcorn for dinner tonight, my love. I'm doing science!

We need a way to simulate high latency, variable latency, limited packet rate, and packet loss. In the olden days a good way to test the performance of a system through a bad connection was to configure the switch port to run at half-duplex. Sometimes we even did such testing on purpose. :) Tor is pretty good for simulating a crappy connection but it only works for publicly-accessible sites. Microwave ovens consistently cause packet loss (my parents' old monster kills wifi at 20 paces) but it's a waste of electricity.

The ipfw on Mac and FreeBSD comes in handy for local testing. The command below will approximate an iPhone on the EDGE network with a 350kbit/sec throttle, 5% packet loss rate and 500msecs latency. Use sudo ipfw flush to deactivate the rules when you are done.

$ sudo ipfw pipe 1 config bw 350kbit/s plr 0.05 delay 500ms

$ sudo ipfw add pipe 1 dst-port http

Here's another that will randomly drop half of all DNS requests. Have fun with that one.

$ sudo ipfw pipe 2 config plr 0.5

$ sudo ipfw add pipe 2 dst-port 53

To measure the effects of latency and packet loss I chose a highly-cached 130KB file from Yahoo's servers. I ran a script to download it as many times as possible in 5 minutes under various ipfw rules [3]. The "baseline" runs were the control with no ipfw restrictions or interference.
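For readers who want to run a similar experiment, here is a minimal sketch of a download-timing loop. It is not the script I used; the function names are made up for this example, and the URL in the usage comment is a placeholder you would replace with your own test file.

```python
import time
from urllib.request import urlopen


def fetch_url(url: str) -> int:
    """Download the URL once and return the number of bytes received."""
    with urlopen(url, timeout=30) as response:
        return len(response.read())


def timed_downloads(fetch, duration_seconds: float, clock=time.monotonic):
    """Call fetch() repeatedly until the time budget runs out.

    Returns the duration of each call in seconds. A call that begins
    before the deadline is allowed to finish.
    """
    timings = []
    deadline = clock() + duration_seconds
    while clock() < deadline:
        started = clock()
        fetch()
        timings.append(clock() - started)
    return timings


# Usage (placeholder URL, 5-minute run):
# timings = timed_downloads(lambda: fetch_url("http://example.com/file"), 300)
# print(len(timings), "downloads")
```

Passing the fetch function and the clock in as parameters keeps the loop testable without touching the network.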

Figure 1: The effect of packet latency on download speed

Figure 2: Effect of packet loss on download speed

Just 100 milliseconds of packet latency is enough to cause a smallish file to download in an average of 1500 milliseconds instead of 350 milliseconds. And that's not the worst part: the individual download times ranged from 1,000 to 3,000 milliseconds. Software that's consistently slow can be endured. Software that halts for no obvious reason is maddening.

Figure 3: Extreme volatility of response times during packet loss.
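Averages hide this kind of volatility, so it helps to report the spread alongside the mean. The sketch below summarizes two sets of response times with the same average. Both sample lists are invented for illustration and are not the measurements behind the figures.

```python
import statistics


def summarize(timings_ms):
    """Return mean, range and population standard deviation of timings."""
    return {
        "mean": statistics.mean(timings_ms),
        "min": min(timings_ms),
        "max": max(timings_ms),
        "spread": max(timings_ms) - min(timings_ms),
        "stdev": statistics.pstdev(timings_ms),
    }


# Invented samples: same mean, very different user experience.
steady = [1500, 1500, 1500, 1500]
jittery = [1000, 1000, 1000, 3000]
print(summarize(steady))
print(summarize(jittery))
```

The second list is the one that makes users wonder whether the app has hung.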

So, latency sucks. Now what?

Yahoo's web performance guidelines are still the most complete resource around, and backed up by real-world data. The key advice is to reduce the number of HTTP requests, reduce the amount of data sent, and to order requests in ways that use the observed behavior of browsers to best effect. However there is a simplification which buckets users into high/low/mobile categories. This doesn't necessarily address poor-quality bandwidth across all classes of user. The user's connection quality is often very bad and getting worse, which changes the calculus of what techniques to employ. In particular we should also take into account that:

- Upstream packets are almost always expensive.

- Any client can have high or low overall bandwidth.

- High latency is not an error condition, it's a fact of life.

- TCP connections and DNS lookups are expensive under high latency.

- Variable latency is in some ways worse than low bandwidth.

Assuming that a large but unknown percentage of your users labor under adverse network conditions, here are some things you can do:

- To keep your user's HTTP requests down to one packet, stay within a budget of about 800 bytes for cookies and URLs. Note that every byte of the URL counts twice: once for the URL and once for the Referer header on subsequent clicks. An interesting technique is to store app state that doesn't need to go to the server in fragment identifiers instead of query string parameters, e.g. /blah#foo=bar instead of /blah?foo=bar. Nothing after the # mark is sent to the server.

- If your app sends largish amounts of data upstream (excluding images, which are already compressed), consider implementing client-side compression. It's possible to get 1.5:1 compression with a simple LZW+Base64 function; if you're willing to monkey with ActionScript you could probably do real gzip compression.

- YSlow says you should flush() early and put Javascript at the bottom. The reasoning is sound: get the HTML <head> portion out as quickly as possible so the browser can start downloading any referenced stylesheets and images. On the other hand, JS is supposed to go on the bottom because script tags halt parallel downloads. The trouble comes when your page arrives in pieces over a long period of time: the HTML and CSS are mostly there, maybe some images, but the JS is lost in the ether. That means the application may look like it's ready to go but actually isn't: the click handlers and logic and ajax includes haven't arrived yet.

Figure 4: docs is loading slowly... dare I click?

Maybe in addition to the CSS/HTML/Javascript sandwich you could stuff a minimal version of the UI into the first 1-3KB, which gets replaced by the full version. Google Docs presents document contents as quickly as possible but disables the buttons until its sanity checks pass. Yahoo's home page does something similar.

This won't do for heavier applications, or those that don't have a lot of passive text to distract the user with while frantic work happens offstage. Gmail compromises with a loading screen which times out after X seconds. On timeout it asks the user to choose whether to reload or use their lite version.

- Have a plan for disaster: what should happen when one of your scripts or styles or data blobs never arrives? Worse, what if the user's cached copy is corrupted? How do you detect it? Do you retry or fail? A quick win might be to add a checksum/eval step to your javascript and stylesheets.

- We also recommend making as much CSS and Javascript as possible external and parallelizing HTTP requests. But is it wise to do more DNS lookups and open new TCP connections under very high latency? If each new connection takes a couple seconds to establish, it may be better to inline as much as possible.

- The trick is how to decide that an arbitrary user is suffering high latency. For mobile users you can pretty much take high latency as a given [4]. Armed with per-IP statistics on client network latency from bullet #4 above, you can build a lookup table of high-latency subnets and handle requests from those subnets differently. For example if your servers are in Seattle it's a good bet that clients in the 200.0.0.0/8 subnet will be slow. 200.* is for Brasil but the point is that you don't need to know it's for Brasil or iPhone or whatever — you're just acting on observed behavior. Handling individual users from "fast" subnets who happen to have high latency is a taller order. It may be possible to get information from the socket layer about how long it took to establish the initial connection. I don't know the answer yet but there is promising research here and there.

- A good technique that seems to go in and out of fashion is KeepAlive. Modern high-end load balancers will try to keep the TCP connection alive between themselves and the client, no matter what, while also honoring whatever KeepAlive behavior the webserver asks for. This saves expensive TCP connection setup and teardown without tying up expensive webserver processes (the reason why some people turn it off). There's no reason why you couldn't do the same with a software load balancer / proxy like Varnish.
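The lookup table of high-latency subnets mentioned in the list above could be sketched like this. The /8 grouping, the 500 millisecond threshold and the use of the median are all assumptions I picked for illustration, and the function names are hypothetical. The sample addresses and latencies are made up.

```python
import ipaddress
import statistics
from collections import defaultdict


def build_slow_subnets(samples, threshold_ms=500, prefix_len=8):
    """Group (ip, latency_ms) samples by subnet and keep the slow ones.

    A subnet counts as slow when its median observed latency exceeds
    threshold_ms. Both the threshold and the prefix are assumptions.
    """
    by_subnet = defaultdict(list)
    for ip, latency_ms in samples:
        network = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        by_subnet[network].append(latency_ms)
    return {
        network
        for network, latencies in by_subnet.items()
        if statistics.median(latencies) > threshold_ms
    }


def is_slow(ip, slow_subnets):
    """True if the address falls inside any subnet flagged as slow."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in slow_subnets)


# Made-up observations: you act on measured behavior, not on geography.
observed = [("200.1.2.3", 900), ("200.9.9.9", 700), ("8.8.8.8", 40)]
slow = build_slow_subnets(observed)
print(is_slow("200.50.1.1", slow), is_slow("8.8.4.4", slow))
```

A linear scan is fine for a handful of subnets; a large table would want a prefix tree, and the table would need to be refreshed as conditions change.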

This article is the first in a series and part of ongoing research on bandwidth and web app performance. It's still early in our research, but we chose to share what we've found early so you can join us on our journey of discovery. Next, we will dig deeper into some of the open questions we've posed, examine real-world performance in the face of high latency and packet loss, and suggest more techniques on how to make your apps work better in adverse conditions based on the data we collect.

Carlos Bueno

Software Engineer, Yahoo! Mail

Read more about how to optimize your web site performance with the Yahoo! performance guidelines.
